Background

In layman's terms, any machine learning problem can be framed as finding a suitable transformation function. For example, speech recognition looks for a function that maps a one-dimensional time-series speech signal into a semantic space. AlphaGo, the Go-playing AI that recently drew widespread public attention, maps the two-dimensional board position into a decision space to determine the best next move. Likewise, face recognition maps a two-dimensional face image into a feature space that uniquely identifies the person.

Throughout the history of web application attack detection, detection has relied almost entirely on rule-based blacklists. Whether it is a web application firewall (WAF) or an IDS, the detection engine mainly matches messages against its built-in rules. Although this stops most attacks, we believe it has the following problems:

The rule base is hard to maintain. After staff hand over their work, or simply after enough time has passed, even the original author struggles to understand rules written long ago. Once a false positive occurs, fixing it online is very difficult.

Rules written too broadly cause false positives, while rules written too narrowly are easy to bypass.

For example, a rule for detecting SQL injection reads as follows:

```java
String inj_str = "'|and|exec|insert|select|delete|update|count|*|%|chr|mid|master|truncate|char|declare|;|or|-|+|,";
```

A normal comment such as "I was dirty in the selected shirt" gets blocked by mistake.
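The false positive is easy to reproduce. The sketch below is a minimal Python illustration that assumes the string is split on `|` and each entry is matched as a case-insensitive substring (entries such as `*` and `+` suggest literal keyword matching rather than a true regular expression); the helper name `blacklist_hits` is hypothetical.

```python
# Keyword list copied from the rule above
INJ_STR = "'|and|exec|insert|select|delete|update|count|*|%|chr|mid|master|truncate|char|declare|;|or|-|+|,"
KEYWORDS = INJ_STR.split("|")


def blacklist_hits(text):
    """Return every keyword that occurs as a substring of text (case-insensitive)."""
    lowered = text.lower()
    return [kw for kw in KEYWORDS if kw in lowered]


print(blacklist_hits("I was dirty in the selected shirt"))  # ['select']
print(blacklist_hits("hello world"))  # ['or'], matched inside "world"
```

Substring matching on short tokens such as `or` is exactly what makes overly broad rules flag benign text.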

The regex engine seriously hurts performance, especially when there are many rules. For example, we have seen traffic awaiting detection pile up badly in Kafka.

So how do we solve these problems? At a large Internet company in particular, quickly and accurately picking out malicious requests from massive traffic has become a hard problem for us.

Recently, applying machine learning to information security has attracted a lot of attention. We believe any security area that needs to process data and make analyses and predictions can use machine learning. This article describes the path Ctrip Information Security took in applying machine learning to web attack identification.

Introduction to the architecture of Nile, the malicious attack detection system

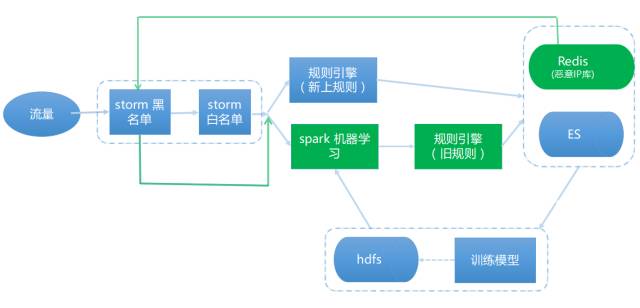

Figure 1: Ctrip Nile attack detection system architecture, first edition

First, a brief introduction to the initial architecture of Nile, Ctrip's attack detection system. As shown in Figure 1, before traffic reaches the rule engine (here, the regex matching engine), a whitelist filters out more than 97% of normal traffic. For example, for http://ctrip.com/flight?Search?key=value, we treat the request as "normal traffic" as long as the parameter value contains no English punctuation or control characters; traffic from Ctrip's egress IPs is also whitelisted, among other cases.
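A minimal sketch of the whitelist rule described above, assuming "English punctuation" means Python's `string.punctuation` and "control characters" means ASCII codes 0 to 31 plus DEL. The function name `is_whitelisted` is hypothetical, and the sketch ignores the egress-IP part of the whitelist.

```python
import string
from urllib.parse import parse_qsl, urlsplit

# Assumption: "English punctuation" = ASCII punctuation in string.punctuation
PUNCTUATION = set(string.punctuation)


def is_control(ch):
    # ASCII control characters (0-31) and DEL (127)
    return ord(ch) < 32 or ord(ch) == 127


def is_whitelisted(url):
    """Return True if no decoded parameter value contains punctuation or control chars."""
    query = urlsplit(url).query
    for _name, value in parse_qsl(query, keep_blank_values=True):
        if any(ch in PUNCTUATION or is_control(ch) for ch in value):
            return False
    return True


print(is_whitelisted("http://ctrip.com/flight?Search?key=value"))      # True
print(is_whitelisted("http://ctrip.com/flight?key=1%27%20or%201=1"))   # False
```

Because `parse_qsl` percent-decodes values, an encoded payload such as `%27` is checked as a literal single quote.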

The remaining 3% of traffic passes through the regex rule engine. If the result is black (a malicious attack), the request is sent to Hulk, our automated vulnerability verification system (for an introduction to Hulk, see https://zhuanlan.zhihu.com/p/28115732), which, for example, calls sqlmap to replay the traffic and re-checks whether the attack could actually succeed.

The Nile system has now reached its fifth edition, with the architecture shown in Figure 2. The most important change is a Spark machine learning engine placed in front of the rule engine; modeling and prediction currently use the Spark MLlib library. If the machine learning engine marks a request black, it is passed to the regex rule engine for a second check, and if it is still black, it is passed on to the Hulk vulnerability verification system.
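The three-stage flow in Figure 2 can be sketched as a small dispatch function. This is an illustration under assumptions, not Nile's code: the engines are passed in as hypothetical callables, and the `"ml_only"` result (model says black, regex says white) is an invented label for requests worth reviewing as possible model false positives.

```python
from typing import Callable


def classify_request(request: str,
                     ml_is_black: Callable[[str], bool],
                     regex_is_black: Callable[[str], bool],
                     send_to_hulk: Callable[[str], None]) -> str:
    # Stage 1: the fast ML engine filters out most traffic
    if not ml_is_black(request):
        return "white"
    # Stage 2: the regex engine re-checks only what the model flagged
    if not regex_is_black(request):
        return "ml_only"
    # Stage 3: confirmed black traffic is handed to Hulk for replay verification
    send_to_hulk(request)
    return "black"
```

Ordering the cheap model first is what keeps the expensive regex engine off the bulk of the traffic.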

Figure 2: The latest version of the Ctrip Nile attack detection system architecture

This brings the following benefits:

Machine learning is faster and filters out most traffic before it reaches the regex engine. This solved the earlier problem of regex matching causing severe Kafka backlogs (even on only 3% of the original traffic).

The results of the regex engine and the machine learning engine can be compared so that each catches the other's mistakes. For example, we can find regex misses or false positives and manually fix or extend the existing regex library. If the machine learning engine produces false positives, marking white traffic as black, the first things to check are whether the black samples are impure and whether feature extraction is flawed.

What if the machine learning engine misses an attack? With the flow in Figure 2, we cannot know what we missed. The most straightforward idea is to run the machine learning engine and the regex engine side by side so that each catches the other's misses, but this violates our premise of pursuing efficiency.

In a recent version we added a dynamic IP blacklist: high-risk IPs with multiple hits within a time window bypass the Storm whitelist entirely. In practice, we also used this black-IP traffic to supplement our training samples (more than 99% of black-IP traffic is attack traffic). In it we found Referer and User-Agent injection, as well as traces of other logic attacks such as order traversal.
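A dynamic blacklist of this kind is commonly built on a sliding-window hit counter. The sketch below assumes that design; the class name, threshold, and window length are hypothetical, since the article does not give Nile's actual parameters.

```python
from collections import defaultdict, deque


class DynamicIpBlacklist:
    """Flag an IP once it has `threshold` hits inside a sliding time window."""

    def __init__(self, threshold, window_seconds):
        self.threshold = threshold
        self.window = window_seconds
        self.hits = defaultdict(deque)  # ip -> timestamps of recent hits

    def record_hit(self, ip, timestamp):
        """Record a rule hit for ip; return True if the ip is now blacklisted."""
        recent = self.hits[ip]
        recent.append(timestamp)
        # Drop hits that have fallen out of the window
        while recent and recent[0] <= timestamp - self.window:
            recent.popleft()
        return len(recent) >= self.threshold


blacklist = DynamicIpBlacklist(threshold=3, window_seconds=60)
for t in (0, 10, 20):
    flagged = blacklist.record_hit("203.0.113.7", t)
print(flagged)  # True: three hits within 60 seconds
```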

Some may ask: under this architecture, if an attacker uses a newly disclosed attack PoC and attacks only once, won't it go undetected? First, if the PoC still contains many special English punctuation marks and sensitive words, we can still detect it. If it really is missed, what then? At that point, only humans can write new rules and add them to the detection logic. As shown in Figure 2, we added a "rule engine (new rules)" that detects such requests directly; its hits are continuously tagged and written to the ES log, and these new attack logs then serve as black samples for learning, and so on.

Effect of adding machine learning: Kafka consumption rose from 10,000/min to over 4 million/min, and the volume of traffic checked after the whitelist rose from 10,000/min to over 100,000/min.

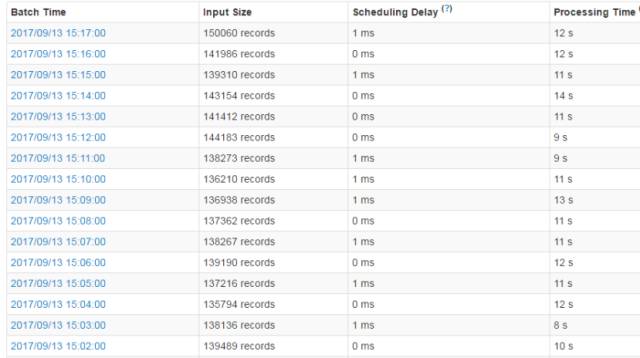

We consume in one-minute batches. A batch of 100,000+ records takes only about 10 seconds to process, so shortening the batch window could in theory raise throughput 5 to 6 times, as shown in Figure 3.

Figure 3: Storm processing speed under the new architecture

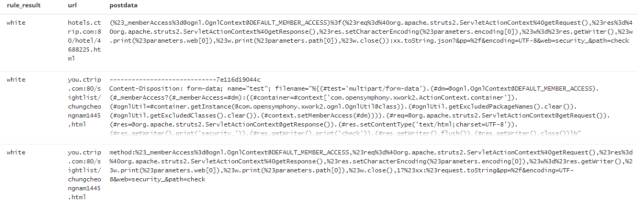

First, let us look at a detection result from the machine learning engine, shown in Figure 4 below:

Figure 4: Machine learning detection record in the ES log

The rule_result tag holds the regex engine's result. At the time we had not yet added regex rules for Struts2 attacks, yet the ES log shows that the machine learning engine still detected the attack.

Having introduced the architecture, let us return to machine learning itself. The following sections describe how to build a machine learning model for web attack detection. In general, applying machine learning to a practical problem takes the following four steps:

Defining the target problem

Collecting data and feature engineering

Training models and evaluating model effects

Online application and continuous optimization

Defining the target problem

Core goals:

A binary classification problem: predict whether a request is an attack or normal.

The false negative rate must be below 10% (we consider false negatives more serious than false positives, since false positives can still be corrected by the second-stage regex engine).

Prediction must be fast; for example, we ruled out the k-nearest-neighbor (kNN) algorithm, which has to compute and sort distances to the training samples at prediction time.

The first difficulty in applying machine learning to information security is the lack of labeled data. Fortunately, our ES logs hold real production traffic labeled by the regex engine, so we decided to model the problem as supervised binary classification. Supervised learning learns from many labeled samples and then makes predictions on new data. Some have proposed unsupervised approaches instead: build a model of normal traffic, for example with cluster analysis, and flag anything that does not fit it as malicious. This article does not discuss them further.

No single machine learning model solves every problem, but we can learn from past experience, such as Bayesian classifiers for spam detection and HMMs for speech recognition. For a comparison of specific algorithms, see https://s3-us-west-2.amazonaws.com/mlsurveys/54.pdf

With the goals clear, we turn to "collecting data and feature engineering", which we consider the most critical step for the success or failure of the model.

Collecting data and feature engineering

We wrote a script that pulls black and white data from ES by day and stores them separately, and added the alert logs from our in-house WAF and PoCs collected online. With that, our raw training material was ready. Note in particular that we extract features and build models separately for GET and POST requests; we leave the reason for readers to work out.

For local experiments I started with Python's sklearn library. The training set contained 100,000+ black and white samples, balanced at a 1:1 ratio. When the project went live, we used Spark MLlib. For ease of presentation, this article uses Python + sklearn.

Now for feature engineering. We consider feature engineering the most important part of a machine learning model. It is more like an art, often relying on expert intuition and domain experience; some even joke that machine learning is really just feature engineering. Would you trust an NBA Finals prediction model built by someone who has never watched an NBA game?

Due to space limitations, here are the feature engineering steps we considered important in this project:

Feature refinement:

Core question: what useful information should be extracted from the training data, and how should it be organized?

Let's look at the difference between the attack statement and the normal statement in the ES log, as follows:

Attack statement: flights.ctrip.com/Process/checkinseat/index?tpl_content= &name=test404.php&dir=index/../../../..&current_dir=tpl

Normal statement: flights.ctrip.com/Process/checkinseat/index?tpl_content=hello,world!

The most obvious features of the attack statement are strings such as eval and ../ and punctuation, while the normal statement contains English commas, exclamation marks, and the like. So we can, for example, use the number of occurrences of eval as one feature dimension. In practice we ignore the URI and extract features only from the parameter values; for the two statements above, the prefix flights.ctrip.com/Process/checkinseat/index?tpl_content is ignored.

```python
import re


def get_evil_eval(url):
    # Count case-insensitive occurrences of "eval" in the string
    return len(re.findall("(eval)", url, re.IGNORECASE))
```

What if there are no parameter values, as in a sensitive-directory guessing attack? We handle these separately: strip uninformative parts such as flights.ctrip.com and extract features from the entire URI.
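A minimal sketch of choosing what text to extract features from, assuming URLs are logged without a scheme as in the ES examples above. The helper name `feature_text` is hypothetical, and joining multiple values with a space is an arbitrary choice for illustration.

```python
from urllib.parse import parse_qsl, urlsplit


def feature_text(url):
    """Return the parameter values to featurize, or the path if there are none."""
    # urlsplit only finds the host when a scheme is present, so add one if missing
    parts = urlsplit(url if "://" in url else "http://" + url)
    values = [v for _name, v in parse_qsl(parts.query, keep_blank_values=True)]
    if any(values):
        return " ".join(values)
    # No values (e.g. directory guessing): drop the host, keep the whole path
    return parts.path


print(feature_text("flights.ctrip.com/Process/checkinseat/index?tpl_content=hello,world!"))
print(feature_text("flights.ctrip.com/admin/.git/config"))
```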

Suppose we choose five features: the counts of script, eval, single quotes, double quotes, and left parentheses. The attack statement above is then converted to [0,1,0,0,2].

Finally, each attack statement becomes a 5-dimensional feature vector with label=1, and normal traffic gets label=0. Each request is thus converted into a 1×n vector, and m training samples form the m×n input matrix for the model.
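The five-feature scheme and the m×n matrix can be sketched as follows. The real `tpl_content` payload is elided in the log excerpt above, so the sketch uses a hypothetical stand-in, `eval(base64_decode(x))`, which happens to produce the same vector [0,1,0,0,2]; the function names are also hypothetical.

```python
import re

import numpy as np

# The five example features: counts of script, eval, ', " and (
FEATURE_PATTERNS = [r"script", r"eval", r"'", r'"', r"\("]


def extract_features(value):
    """Map one parameter value to a 1 x n list of regex match counts."""
    return [len(re.findall(p, value, re.IGNORECASE)) for p in FEATURE_PATTERNS]


def build_matrix(values, labels):
    X = np.array([extract_features(v) for v in values])  # shape (m, n)
    y = np.array(labels)                                  # shape (m,)
    return X, y


# Hypothetical attack value (label 1) and the normal value from above (label 0)
X, y = build_matrix(["eval(base64_decode(x))", "hello,world!"], [1, 0])
print(X.shape)  # (2, 5)
```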

However, after the first version went live, although message queue consumption sped up significantly and the recognition rate was basically acceptable, we still abandoned this regex-based feature extraction, for the following reasons:

When regexes are used to extract features, some keywords will always be missed, which brings back the problem of false negatives.

Tuning features is cumbersome: adding a feature dimension may increase false positives, while removing one may increase false negatives.

At prediction time, each request must again be converted into a numeric feature vector, which reduces engine efficiency.

We took inspiration from machine learning for sentiment classification. After validating the approach against reference 1 (https://github.com/jeonglee/ML), we decided to replace regex-extracted features with TF-IDF features.

We believe binary sentiment classification and black/white traffic classification are similar in nature. The former takes a sentence such as "Tom, you are not a good boy!" and judges whether it is positive or negative. Our statements carry few positive or negative sentiment words; instead, they contain more English punctuation and some suspicious high-risk words such as select. So we swap the concepts: high-risk English punctuation plays the role of a negative sentiment word, other words play the role of neutral words, and our problem becomes a binary classification between "neutral statements" and "malicious statements".

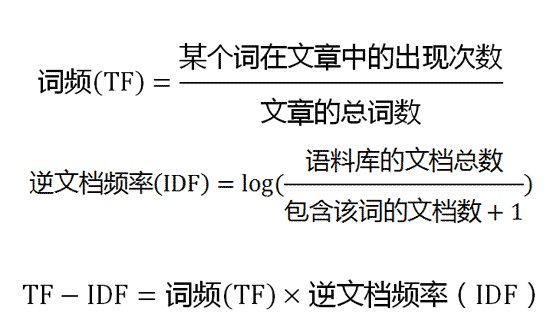

Here is a brief introduction to TF-IDF; for details, see https://en.wikipedia.org/wiki/Tfidf.

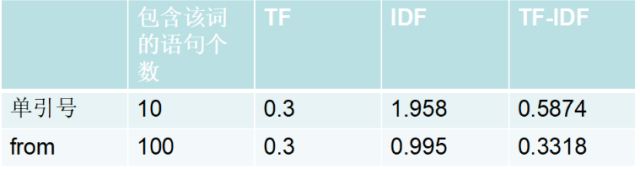

For example, suppose we have 1,000 GET request statements. The first statement has 10 words in total, including 3 single quotes and 3 occurrences of from. Of the 1,000 statements, 10 contain single quotes and 100 contain from. TF-IDF is then calculated as follows (before the calculation, punctuation and special characters in each sentence must be handled, for example converted to string tokens; see reference 1 for details):

Calculation result: the TF-IDF of the single quote (0.587) is greater than that of from (0.3318).
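The arithmetic can be reproduced in a few lines. The exact IDF variant is not stated in the text; this sketch assumes tf = count / total words and idf = log10(N / (df + 1)), which yields about 0.587 for the single quote. Other IDF variants change the absolute values (this one gives about 0.30 for from), but the single quote still scores higher.

```python
import math


def tf(term_count, total_terms):
    return term_count / total_terms


def idf(num_docs, docs_with_term):
    # Assumed variant: base-10 log with +1 smoothing in the denominator
    return math.log10(num_docs / (docs_with_term + 1))


def tfidf(term_count, total_terms, num_docs, docs_with_term):
    return tf(term_count, total_terms) * idf(num_docs, docs_with_term)


quote = tfidf(3, 10, 1000, 10)   # single quote: 3 of 10 words, in 10 of 1000 statements
from_ = tfidf(3, 10, 1000, 100)  # from: 3 of 10 words, in 100 of 1000 statements
print(f"single quote: {quote:.3f}, from: {from_:.3f}")
```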

The main idea of TF-IDF is that if a word or phrase appears often in one document but rarely in others, it has good power to distinguish between classes and is suitable for classification. This matches our intuition: the single quote has the larger TF-IDF value and says more than from about whether a statement is an attack.

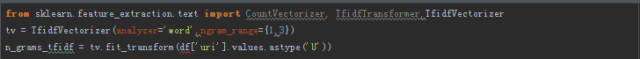

The code demo is as follows:

We set ngram_range=(1, 3) because we want to keep the order of adjacent words as part of the feature. For example, in "Tom, you are not a good boy!", one feature dimension is the phrase [not, a, good], whose TF-IDF is computed as a unit. You can also build features at the character level instead; which parameters work best has to be determined by experiment. For stop words, converting punctuation, and so on, see https://github.com/jeonglee/ML/blob/master/spark/NaiveBayes/src/main/java/WordParser.java; we won't go into detail here.
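In sklearn, the default `token_pattern` of `TfidfVectorizer` drops punctuation and single-character tokens, which would discard exactly the signals this approach relies on. The sketch below therefore passes a custom tokenizer that maps punctuation to word tokens. The mapping table and the `tokenize` function are hypothetical, written in the spirit of the WordParser reference rather than as a port of it.

```python
import re

from sklearn.feature_extraction.text import TfidfVectorizer

# Hypothetical punctuation-to-word mapping, so punctuation survives as tokens
PUNCT_NAMES = {
    "'": "squote", '"': "dquote", "(": "lparen", ")": "rparen",
    "<": "lt", ">": "gt", ";": "semicolon", "=": "eq",
    "/": "slash", ".": "dot", ",": "comma", "!": "bang",
}


def tokenize(text):
    """Split into alphanumeric words and single punctuation characters."""
    raw = re.findall(r"[a-z0-9_]+|[^\sa-z0-9_]", text.lower())
    return [PUNCT_NAMES.get(tok, tok) for tok in raw]


vectorizer = TfidfVectorizer(
    tokenizer=tokenize,
    token_pattern=None,   # token_pattern is unused when a tokenizer is given
    lowercase=False,      # tokenize() already lowercases
    ngram_range=(1, 3),   # unigrams, bigrams and trigrams keep word order
)
X = vectorizer.fit_transform(["id=1' or '1'='1", "hello,world!"])
print(X.shape)
```

With trigrams enabled, a sequence such as `' or '` becomes the single feature `squote or squote`.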

Sample data cleaning:

Now that we have worked out how to extract features, modeling seems ready. At this point we ask ourselves: how well does the training data cover real traffic, and are the original labels accurate? If our training samples are impure, the results will be unsatisfactory. Here is what we did to clean the samples:

Optimize the existing detection rules: when we opened white.txt and black.txt and inspected them by eye, we found many errors, so the regex engine itself needed optimizing.

Add the dynamic IP blacklist and collect its attack logs as extra black samples. We observed that more than 99% of the traffic from these continuously scanning IPs is black.

For white samples, we can take raw traffic from a time period directly, since white traffic makes up more than 99.99% of the mirrored traffic.

Deduplicate the samples, removing requests with identical content.

Remove encrypted requests from the samples based on their parameter names.

Build a black vocabulary and match it against the white samples to find obviously mislabeled ones. For example, create a black vocabulary [base64_decode, ognlcontext, img script, struts2, ...], search the white samples for matching statements, and remove them. This method applies in many places; for example, a travel company's customer service bot can use hotel keywords to clean its train-ticket samples, which is also where our inspiration came from.
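The white-sample cleaning steps above (deduplication, dropping encrypted parameters, removing black-vocabulary hits) can be combined in one pass. This is a sketch under assumptions: samples are modeled as hypothetical `(parameter_name, value)` pairs, and the encrypted parameter names are invented placeholders.

```python
# Black vocabulary from the text; matched as case-insensitive substrings
BLACK_VOCAB = ["base64_decode", "ognlcontext", "img script", "struts2"]
# Hypothetical names of parameters that carry encrypted content
ENCRYPTED_PARAMS = {"token", "sign"}


def clean_white_samples(samples):
    """Drop encrypted params, duplicate values, and values hitting the black vocabulary."""
    seen = set()
    cleaned = []
    for param, value in samples:
        if param in ENCRYPTED_PARAMS:
            continue  # encrypted payloads carry no useful text features
        if value in seen:
            continue  # identical request content already kept
        lowered = value.lower()
        if any(word in lowered for word in BLACK_VOCAB):
            continue  # likely a mislabeled attack sitting in the white set
        seen.add(value)
        cleaned.append((param, value))
    return cleaned
```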

Data cleaning accounts for more than 60% of our workload. It is an unavoidable part of continuous optimization: manual labor that cannot be skipped.

Feature normalization: because we use TF-IDF, we did not apply separate normalization, since term frequency (TF) already divides by statement length and keeps long statements from being favored. Note, however, that if you use the first version's regex-count features, you must normalize them. For the reasons and methods of normalization, see http://blog.csdn.net/leiting_imecas/article/details/54986045.
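For the regex-count features, min-max scaling is one common option (standardization with `StandardScaler` is another); which one the authors recommend is covered in the linked post, not here. The sketch uses sklearn's `MinMaxScaler` on hypothetical count rows and shows the key point that the scaler is fitted on training data and reused unchanged at prediction time.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Rows are requests, columns the five regex-count features (hypothetical counts)
X_train = np.array([[0, 1, 0, 0, 2],
                    [0, 0, 0, 0, 0],
                    [1, 3, 2, 0, 6]], dtype=float)

scaler = MinMaxScaler()                  # rescales each column to [0, 1]
X_train_scaled = scaler.fit_transform(X_train)

# At prediction time, reuse the scaler fitted on the training data
X_new_scaled = scaler.transform(np.array([[0, 2, 1, 0, 3]], dtype=float))
print(X_train_scaled)
```

A column that is constant in training (here the double-quote count) maps to 0 rather than causing a division by zero.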

Training models and evaluating model effects

An initial evaluation with sklearn is simple. We hold out data: train on 50% and test on the other 50% to see whether the results meet expectations.
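A minimal hold-out sketch. The classifier used in production is not named in this text, so `MultinomialNB` (a Naive Bayes classifier, in line with the sentiment-classification reference) is used purely for illustration, character n-gram TF-IDF stands in for the custom tokenizer, and the eight toy strings are invented.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Invented toy samples: label 1 = attack, 0 = normal
docs = [
    "1' or '1'='1", "<script>alert(1)</script>",
    "../../../../etc/passwd", "eval(base64_decode($_POST[x]))",
    "hello,world!", "beijing", "shanghai to tokyo", "economy class",
]
labels = [1, 1, 1, 1, 0, 0, 0, 0]

# Hold out 50% for testing, keeping the class ratio equal in both halves
X_train, X_test, y_train, y_test = train_test_split(
    docs, labels, test_size=0.5, random_state=0, stratify=labels)

model = make_pipeline(TfidfVectorizer(analyzer="char", ngram_range=(1, 3)),
                      MultinomialNB())
model.fit(X_train, y_train)
print("hold-out accuracy:", model.score(X_test, y_test))
```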

If the results at this point are unsatisfactory, there are generally three possible reasons, and most often it is the first or second. We believe the algorithm is only the icing on the cake; the quality of feature engineering and of the samples is the key to accuracy.

Feature extraction is flawed. There is no shortcut here; it depends entirely on the practitioner's domain knowledge.

The training samples are flawed: too many wrong labels, or unbalanced classes.

The algorithm and its training parameters need tuning.

The first two have already been covered, so let's talk about parameter tuning. Here we use sklearn's GridSearchCV, which systematically tries every combination in a parameter grid and uses cross-validation to choose the best-performing one. See the official example at http://scikit-learn.org/dev/modules/generated/sklearn.grid_search.GridSearchCV.html.
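In current scikit-learn releases the old `sklearn.grid_search` module no longer exists and `GridSearchCV` lives in `sklearn.model_selection`. The sketch below tunes a hypothetical TF-IDF plus `MultinomialNB` pipeline on invented toy data; choosing `scoring="recall"` is an assumption that reflects the stated priority of missing fewer attacks.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import GridSearchCV
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import Pipeline

# Invented toy samples: label 1 = attack, 0 = normal
docs = [
    "1' or '1'='1", "<script>alert(1)</script>",
    "../../../../etc/passwd", "eval(base64_decode($_POST[x]))",
    "hello,world!", "beijing", "shanghai to tokyo", "economy class",
]
labels = [1, 1, 1, 1, 0, 0, 0, 0]

pipeline = Pipeline([
    ("tfidf", TfidfVectorizer(analyzer="char")),
    ("clf", MultinomialNB()),
])
# Every combination of these values is tried
param_grid = {
    "tfidf__ngram_range": [(1, 1), (1, 3)],
    "clf__alpha": [0.1, 1.0],
}
# scoring="recall" favors settings that miss fewer attacks (label 1)
search = GridSearchCV(pipeline, param_grid, cv=2, scoring="recall")
search.fit(docs, labels)
print(search.best_params_, search.best_score_)
```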

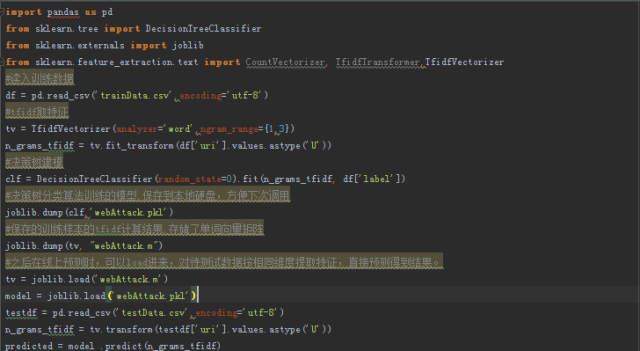

Once the hold-out results meet our expectations, we save the trained model to disk so it can be loaded directly next time.
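One common way to persist an sklearn model is `joblib`; the article does not say which serializer was used, so this is an assumption, as are the file name and the toy data. Saving the whole pipeline keeps the fitted TF-IDF vocabulary together with the classifier, so online prediction featurizes requests exactly as in training.

```python
import os
import tempfile

import joblib
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Invented toy samples: label 1 = attack, 0 = normal
docs = ["1' or '1'='1", "<script>alert(1)</script>", "hello,world!", "beijing"]
labels = [1, 1, 0, 0]
model = make_pipeline(TfidfVectorizer(analyzer="char", ngram_range=(1, 3)),
                      MultinomialNB())
model.fit(docs, labels)

# Hypothetical file name; vectorizer and classifier are saved together
model_path = os.path.join(tempfile.gettempdir(), "nile_model.joblib")
joblib.dump(model, model_path)

# Later, e.g. in the online service: load once, then predict directly
loaded = joblib.load(model_path)
print(loaded.predict(["<script>alert(2)</script>"]))
```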

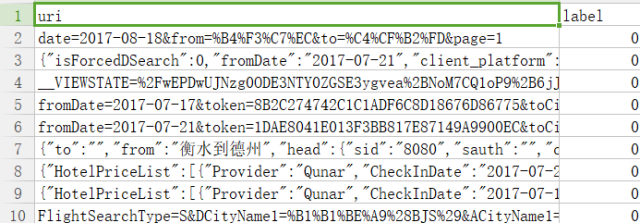

The demo code for training and online prediction follows. First, we store the black and white samples in trainData.csv, with the request in the uri column and its label in the label column.

Figure 5: Training sample data csv storage format
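A minimal loader for the trainData.csv layout in Figure 5, using only the standard library. It assumes a header row with the columns `uri` and `label` and integer labels (1 = attack, 0 = normal); the function name `load_training_data` is hypothetical.

```python
import csv


def load_training_data(path):
    """Read (uri, label) pairs from a CSV file with a uri,label header."""
    uris, labels = [], []
    with open(path, newline="", encoding="utf-8") as f:
        for row in csv.DictReader(f):
            uris.append(row["uri"])
            labels.append(int(row["label"]))
    return uris, labels
```

Using the `csv` module rather than splitting on commas matters here, because request strings such as `hello,world!` contain commas and must be quoted in the file.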

When evaluating the model on validation data with known labels, we recommend using the confusion matrix as the criterion.

```python
from sklearn import metrics

# expected holds the true labels; predicted holds the model's predictions
print("Confusion matrix:\n%s" % metrics.confusion_matrix(expected, predicted))
```

Output:

```
Confusion matrix:
[[    1     0]
 [ 4226 65867]]
```

A brief explanation of the confusion matrix:

| True condition | Predicted positive | Predicted negative |
| --- | --- | --- |
| Positive | TP: actually positive, predicted positive | FN: actually positive, predicted negative |
| Negative | FP: actually negative, predicted positive | TN: actually negative, predicted negative |

Our validation set contains only one normal sample. sklearn puts label 0 (normal) in the first row, so reading normal traffic as the positive class in the table above, FN is 0. What we care about more is malicious traffic recognized as normal (a missed attack): there are 4226 such misses. To compute the false negative rate, use the following metrics.

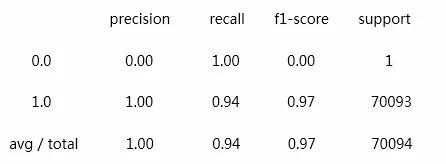

```python
print("Classification report for classifier %s:\n%s" % (model, metrics.classification_report(expected, predicted)))
```

Output:

Recall: Recall = TP / (TP + FN)

Accuracy: Accuracy = (TP + TN) / (TP + FP + TN + FN)

Precision: Precision = TP / (TP + FP)

F1-score is the harmonic mean of recall and precision; assuming the two are equally important, the formula is:

F1-score = (2 × Recall × Precision) / (Recall + Precision)

Our recall on attack traffic here is 0.94, which means a false negative rate of 6%. This is barely acceptable and needs continued optimization.
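The 0.94 figure can be checked against the confusion matrix. Only the matrix is reported, so the sketch rebuilds synthetic label vectors with exactly those counts (1 normal sample predicted normal; 4226 attacks predicted normal; 65867 attacks predicted attack) and computes the attack-class recall with sklearn.

```python
from sklearn import metrics

# Synthetic labels reproducing the reported counts: 0 = normal, 1 = attack
expected = [0] + [1] * (4226 + 65867)
predicted = [0] + [0] * 4226 + [1] * 65867

cm = metrics.confusion_matrix(expected, predicted)
recall = metrics.recall_score(expected, predicted, pos_label=1)  # 65867 / 70093
print(cm)
print(f"attack recall: {recall:.4f}, false negative rate: {1 - recall:.4f}")
```

Here recall is computed with attacks as the positive class, so 1 minus recall is the share of attacks that slipped through as normal traffic.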

Online application and continuous optimization

Going online means embedding the trained model into our existing Nile framework, with a one-click switch for the machine learning engine and another for the regex engine. Attack types that the model often misses can go straight to the regex engine, but the number of regex rules must be kept in check, or we will be back at the dead end of regex-based detection. From here we need to keep monitoring the output, keep automating rule supplementation, and retrain new models automatically.

With the Nile framework described above, the biggest problem we currently face is how to handle missed attack traffic and whether that risk is acceptable. We have not yet found a good solution.

In the final analysis, we still believe feature extraction has the greatest influence on model accuracy. Feature engineering is dirty work that takes far more time than the other steps; it needs more engineers and often demands deep domain knowledge, experience, keen intuition, and some "inspiration". Good features can produce better results even with a weak algorithm or poor parameters, because good features are closer to the essence of the real problem. Beyond that, there is no substitute for engineers who diligently clean the data.

Future prospects

At present, our use of machine learning in information security still has room to grow in the following areas:

Non-standard JSON and XML payloads: the data is long and full of punctuation, and some structures are non-standard (for example, JSON that cannot be parsed cleanly), which leads to wrong predictions.

Multi-class classification: identifying the type of each web attack would integrate better with Hulk.

Other applications, such as random domain name detection, malicious UGC comments, and pornographic image recognition; we have already started work in these areas.

Replacing the Spark MLlib library with the Spark ML library.

To sum up in one sentence: the road has just begun.
